A few months ago Katie Hinde posted the story she told her anthropology students in the first class of the semester. Eschewing a run-through of the syllabus, she instead illustrated the overarching themes of her course with a compelling tale of species-hopping disease outbreaks and the cultural behaviours and conflicts that shaped both the course of the outbreak and its aftermath. It’s pretty awesome. You should read it.

Like Katie, I’ve been telling a story at the beginning of the introductory geology course I teach, called How the Earth Works, for a couple of years now. I’m not going to claim it’s as good a story, but I like to think it gives a flavour of the kinds of stories you can tell about the Earth, if you know how to look: stories of how the world slowly remakes itself over hundreds of millions of years, of how the very high was once the very low, and will be again. But I’ve never written it down, which probably means that I don’t actually tell it as well as I could. So to kick off this semester, I thought I’d tell it properly.

It starts, unsurprisingly, with a rock. Rocks are witnesses to the ‘crime’ of Earth history. Geologists are the detectives, teasing clues out of the rocks to work out what happened, when, and why. Today’s person of lithological interest is from a very special place:

Mount Everest viewed from the southeast.

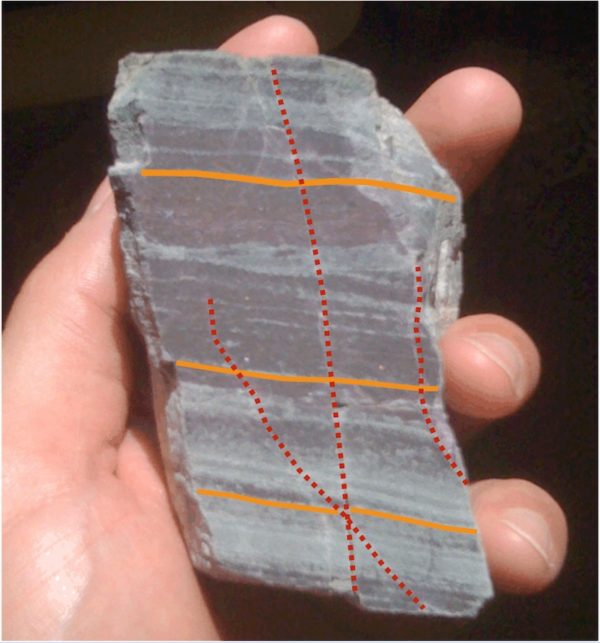

About 20 feet (roughly 6 metres) below the summit of Mount Everest, almost 9 kilometres above sea-level, you find an outcrop of rock that looks like this.*

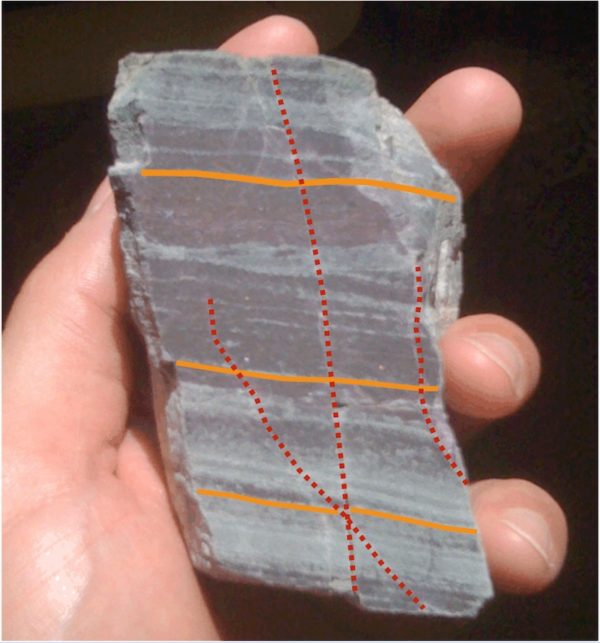

So what secrets does rock taken from the roof of the world hold? Two basic features stand out:

- It is layered. The dark and light grey bands have been formed by mineral grains slowly settling to the bottom of an ocean, sea, or lake over thousands of years. This is a sedimentary rock.

Layers.

- It is fractured. There are cracks, and the originally continuous sedimentary layers are offset across them. This rock has been deformed.

Fractures.

These simple observations already tell us a lot, but with a bit more detail, and a little specialist knowledge, we can start to tell a much more vivid story. The next question to ask is: what are the grains that make up this sedimentary rock like? Firstly, they are extremely small – it’s actually pretty much impossible to pick out individual grains in this picture, because they are only fractions of a millimetre across. This, in itself, tells us something important: such small grains, which are easily wafted away by even the weakest current, only settle out of the water column somewhere very sheltered, or deep enough that the water is undisturbed by even the strongest storms at the surface.

Different minerals have different properties, like colour and hardness; some minerals are very common in some kinds of rocks, and not in others. Clues such as these point to the teeny tiny, dull grey mineral grains in this rock being made of calcium carbonate (most calcium carbonate is in the form of a mineral called calcite; in this rock it is in a slightly different form with some magnesium mixed in, called dolomite). Most calcium carbonate in the geological record is produced by living organisms, which use it to build protective shells. But the very fine-grained crystals we see here are not shell fragments; they are individual grains that precipitated directly out of the water before settling onto the sea bed. This gives us yet more useful information: the right conditions for the calcium and bicarbonate ions dissolved in seawater to spontaneously crystallise into new mineral grains only occur in a few places. Here’s one such place:

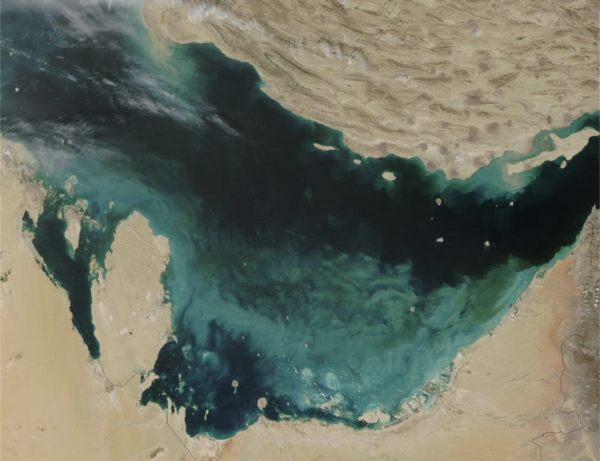

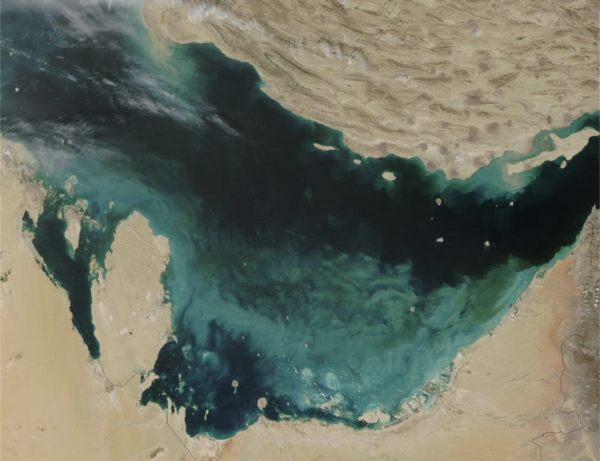

Satellite image of the Persian Gulf, with ‘whitings’ (small bright white patches) produced by carbonate formation in the shallow waters off the coast of the United Arab Emirates. The larger-scale cloudiness is probably the result of a phytoplankton bloom. Via the USGS

The Persian Gulf is a warm, shallow sea. In the summer, biological activity (photosynthesis removes CO2 and makes ocean water less acidic) and concentration of dissolved ions by evaporation make it possible to spontaneously precipitate calcium carbonate – the small bright white patches in the satellite image above are basically clouds of small carbonate crystals, suspended in the water, that will eventually settle and accumulate to form limestone on the sea bed.
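For readers who like their chemistry written out, the precipitation described above is usually summarised by a single equilibrium. This is standard carbonate chemistry rather than anything specific to the Persian Gulf, and it glosses over the magnesium that turns calcite into dolomite. Photosynthesis pulling CO₂ out of the water and evaporation concentrating the dissolved ions both push the reaction to the right, towards solid calcium carbonate. Read from right to left, the same reaction describes slightly acidic water dissolving limestone.

```latex
\mathrm{Ca^{2+}} + 2\,\mathrm{HCO_3^{-}} \;\rightleftharpoons\; \mathrm{CaCO_3} + \mathrm{CO_2} + \mathrm{H_2O}
```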

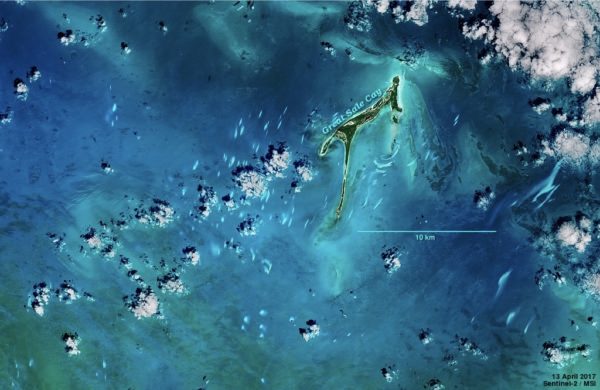

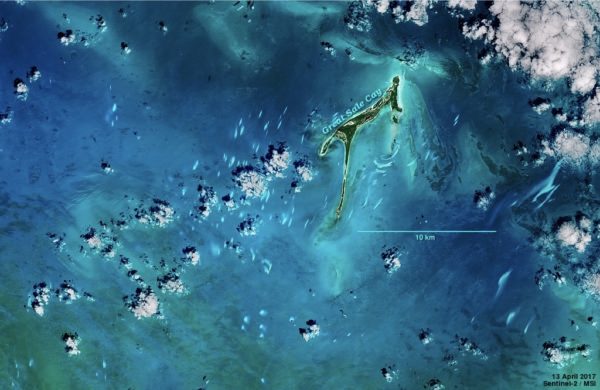

Here’s another place where something similar is happening: the Bahamas.

Small patches of whitings in the Northern Bahamas.

In some places, changes in sea-level have left the resulting rock exposed above the waves. Look a little familiar?

Note the conditions these two places have in common: calm, warm, shallow water. One of the key geological ideas that we will explore in this course is the principle of uniformitarianism: the notion that we can understand past rocks in terms of the processes that shape the modern Earth. So if we see a rock that looks like something that forms today in warm, shallow tropical seas, we expect that that rock also formed in warm, shallow tropical seas. Let’s just remind ourselves where we actually found it.

*record scratch* *freeze frame* Yup, that’s me. You’re probably wondering how I ended up in this situation.

Clearly, something rather dramatic has happened to this rock since it formed, lifting it at least 9 kilometres into the air. And the forces that did this have left their mark on our rock, in the form of the fractures we’ve already noted.

Nowadays, most people know how the Himalayas formed: from the collision of two continents, as a result of plate tectonics. In the world’s biggest and slowest car crash, India is moving north into the space occupied by Asia, and the crust in the collision zone is crumpling up, creating the world’s highest mountains.

Reconstructed motion of India in the last 70 million years. From the USGS.

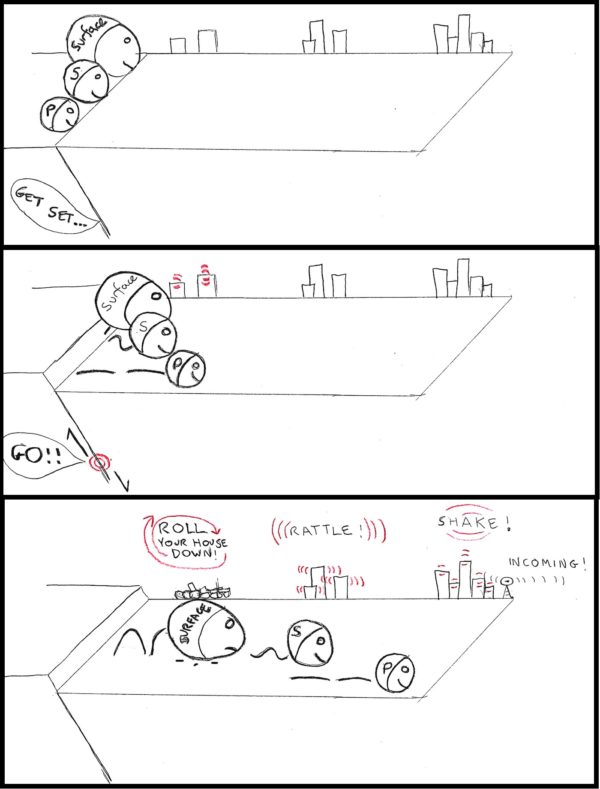

Uniformitarianism allows us to unravel the what of Earth history; plate tectonics is what allows us to understand the why. Why are rocks that formed at the bottom of the ocean now at the roof of the world? Because the Earth’s surface is split up into a constantly morphing jigsaw puzzle. As the pieces – the rigid plates – jostle and slide against each other, you get earthquakes, volcanoes, and a dramatic reshaping of the Earth’s surface. Where plates pull apart, you get new oceans; where they collide, you get mountain belts.

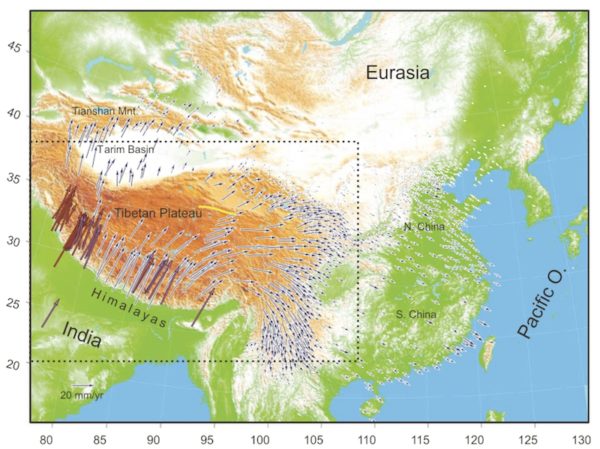

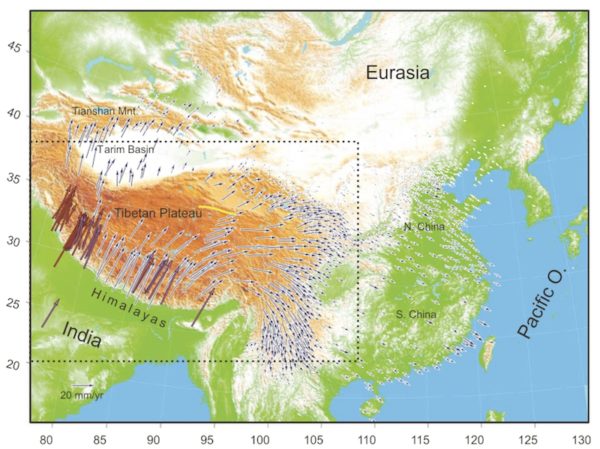

We’ll tell the story of how we know this in a couple of weeks. It was a hard-won insight, and a recent one, too: the geologists who taught me – and despite the grey hairs, I’m not that old – actually lived through the discovery. Many of them have now also lived to see us develop the ability to watch plate tectonics happening, almost in real time. The GPS in your phone lets you find the nearest coffee shop or hail an Uber; but attach a GPS unit to solid rock, leave it for a few years, and you can see that some parts of the Earth’s surface are moving steadily relative to others, at rates of a few centimetres a year. The map below shows that India is still pushing into Asia at about 4 centimetres a year. That may not seem like much, but at that rate India would have travelled about 2,500 kilometres in the time since the dinosaurs went extinct (and we believe it was moving several times faster than that before the collision).

The arrows show the direction and speed of motion of GPS stations relative to the interior of Eurasia. India is moving to the northeast at about 4 centimetres a year. The arrows reduce in size as this motion is accommodated by faulting, and change direction where crust is being shoved out of the way rather than getting crumpled up. From Gan et al., 2007

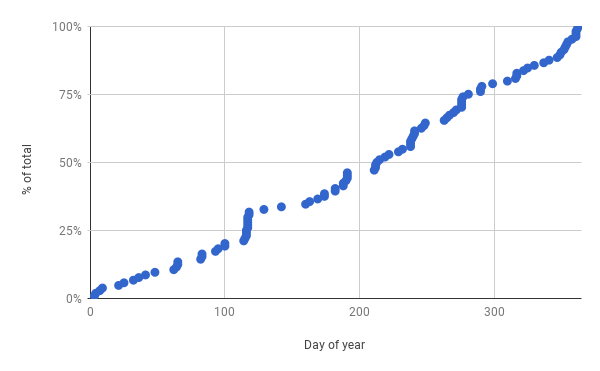
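The 2,500 kilometre figure is easy to check with some back-of-envelope arithmetic. Here is a minimal Python sketch. It assumes a perfectly constant 4 centimetres a year and roughly 66 million years since the dinosaurs went extinct; that date is my assumption, not something stated above. `distance_km` is just an illustrative helper name.

```python
# Back-of-envelope check: how far does a plate moving at a steady rate travel?
# Assumptions (not from the original text): the rate is constant, and ~66 million
# years have passed since the end-Cretaceous extinction of the dinosaurs.

def distance_km(rate_cm_per_yr: float, years: float) -> float:
    """Distance travelled, in kilometres, at a constant rate given in cm/yr."""
    cm_per_km = 100 * 1000  # 100 cm per metre, 1000 metres per kilometre
    return rate_cm_per_yr * years / cm_per_km

india = distance_km(4.0, 66e6)  # 4 cm/yr for 66 million years
print(f"{india:,.0f} km")
```

The sketch gives about 2,640 kilometres, which fits the rounded figure above. Real plate motions are not constant, and India was moving much faster before the collision.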

So, from this one rock, we can tell the story of how our planet changes; of how lands that were once at the bottom of a tropical ocean now lord it over the rest of the world’s topography. It’s a pretty good story. You may even have heard a similar one before. But what you may not have heard is that the story doesn’t end there. Because mountains don’t stay still. We’ve just seen that the collision that created the Himalayas is still going. India’s continued motion is pushing the Himalayas, including Mount Everest, ever higher, by around half a centimetre to a centimetre a year. However, even if the land beneath is going up that fast, the summit of Mount Everest is not. Another important Earth process is hard at work trying to grind the mountains down again.
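A similar sketch shows why the summit can’t simply be keeping pace with the rocks beneath it. The rates of half a centimetre and a centimetre a year come from the text. The one-million-year window is an arbitrary illustrative choice, and `uplift_km` is a hypothetical helper.

```python
# Rough illustration of how much uplift accumulates over geological time.
# Rates (0.5 and 1 cm/yr) are from the text; the 1-million-year window is an
# arbitrary choice for illustration, and the rate is assumed constant.

def uplift_km(rate_cm_per_yr: float, years: float) -> float:
    """Total uplift, in kilometres, at a constant rate given in cm/yr."""
    return rate_cm_per_yr * years / 100_000  # 100,000 cm in a kilometre

for rate in (0.5, 1.0):
    print(f"{rate} cm/yr over 1 million years: {uplift_km(rate, 1e6):.0f} km")
```

Even that short slice of geological time adds 5 to 10 kilometres of uplift. If all of it showed up at the top, Everest, already almost 9 kilometres high, would be heading for 14 to 19 kilometres. It isn’t, so something must be taking rock away from the top.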

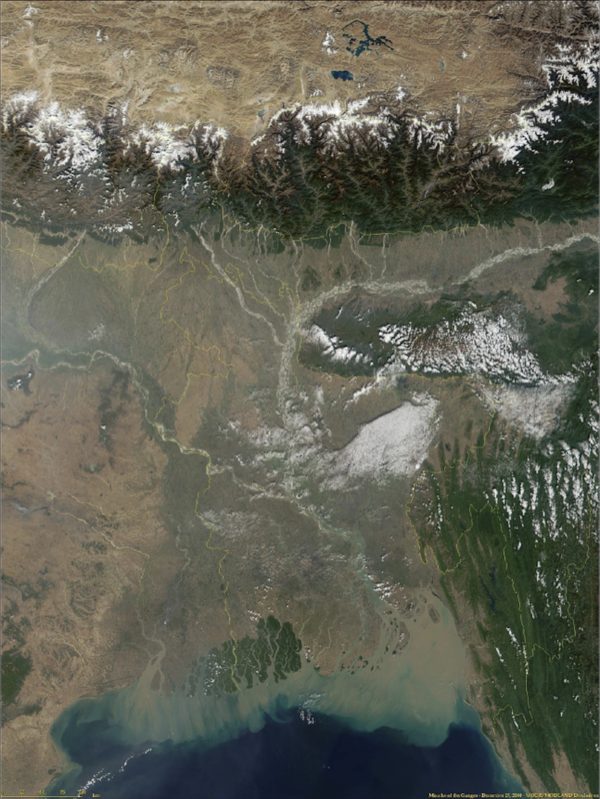

When I spent time in New Zealand doing fieldwork for my PhD, I learnt a phrase: ‘Tall Poppy Syndrome’. It describes how standing out from the crowd focusses the crowd’s attention on you, and often triggers a desire to cut you down to size. In a similar way, elevated rocks expose themselves to the water cycle. Mountains create weather. Storms, freezing water, and flowing ice physically and chemically attack the rocks exposed on the peaks – including our limestone – gradually weakening and fracturing them until they break apart. Fragments large and small fall onto the glaciers that fill the valleys. These icy conveyor belts, darkened by their load of debris, flow downhill and out of the Himalayas.

Around Mount Everest, the blinding white of the snow on the peaks contrasts strongly with the dirty appearance of the glaciers in the surrounding valleys – glaciers covered with rocky debris produced by intense weathering and erosion. Image from NASA’s Earth Observatory

Eventually the glaciers melt, but the water keeps flowing downhill, fast enough to carry all but the very largest boulders with it. And water continues to attack the rocks chemically, breaking them down into their individual elements and carrying them downstream as dissolved ions. Because rainwater is slightly acidic, carbonate rocks are particularly prone to this chemical disassembly, so the rivers flowing out of the Himalayas are loaded with dissolved calcium and bicarbonate ions: the building blocks of future carbonate minerals.

And so the rocks that plate tectonics raised up are cut back down to size by water and gravity, and returned to where they came from. When rivers reach the coast, the water slows and drops its load of sand and mud. New land – and eventually, new sedimentary rock – is built. The Bengal Fan is a 16-kilometre-thick pile of eroded debris, carried out of the Himalayas by the Ganges and Brahmaputra rivers. But the water, and its dissolved ionic passengers, does not stop. Wind-driven currents carry it onwards, until the chemical wreckage of the Himalayas is spread through every ocean basin and the waters of every shallow sea. Places such as the Bahamas, or the Persian Gulf.

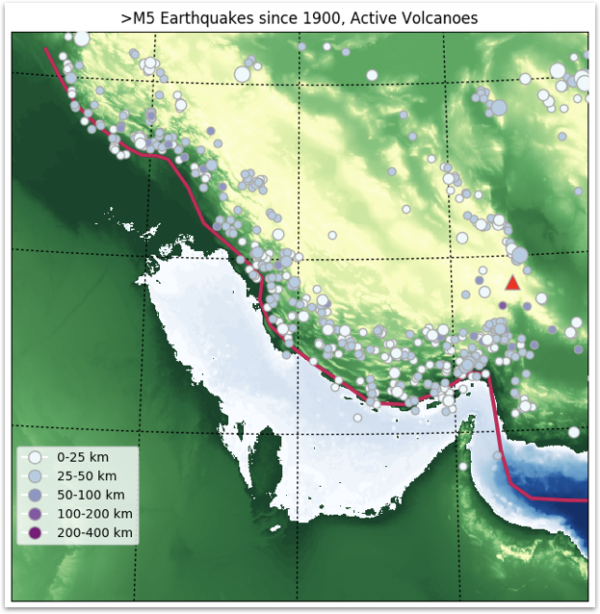

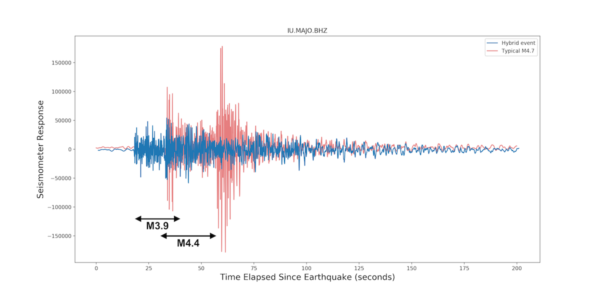

You can’t point to an individual particle in those clouds of new mineral grains and say, ‘that one contains calcium from Mount Everest!’, but some of them do. In a cycle that has spanned a whole planet and hundreds of millions of years, elements have moved from water in an ancient ocean, to rock at the bottom of that ocean, to rock at the highest point on the planet, back to the modern oceans, and then back to rock on the sea bed again. For a while, at least. Because Arabia is on the move. Iran is a hotbed of seismic activity as earthquakes accommodate plate convergence.

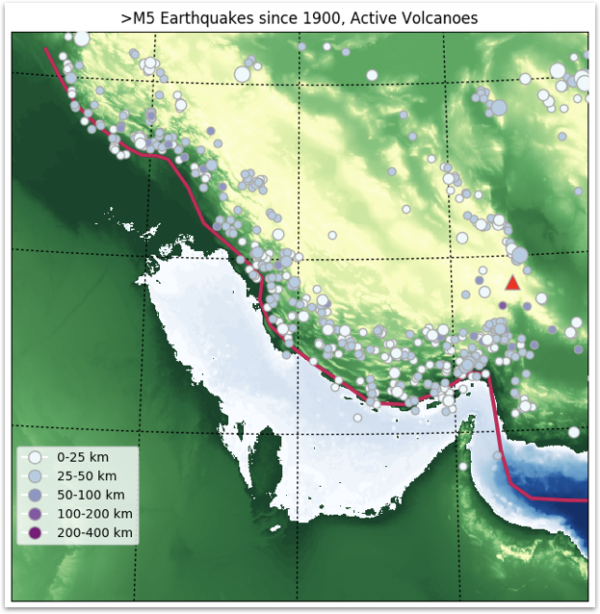

The concentrated band of seismicity in the Zagros Mountains on the north coast of the Persian Gulf, which continues along the Iran-Iraq border, marks a zone of convergence generated by the northeastward motion of the Arabian Peninsula relative to Asia.

The grand cycles that make up the story of Earth history – cycles of rock, of water, of energy – will continue. The shallow sea now between Arabia and Iran will be thrown up, and crumpled up, and in a future mountain range, an intrepid geologist – maybe human-ish, maybe cockroach – will find a layered, fine-grained, deformed carbonate rock. Hard evidence that the Persian Gulf, long closed up, once existed – until its components are once more returned to a far-future ocean. It’s enough to give you (Cylon) religion.

“All of this has happened before, and all of this will happen again.”

Except, perhaps, for one thing. It is undeniably true that our understanding of how the Earth has operated up to this point can help us understand what the future has in store. But there is also a new geological force at work, one the planet has not seen before: us. Our prodding may well push the planet into places it would not have gone without us. If anything, this makes understanding what makes this planet of ours tick even more important. We have found the accelerator; it would be nice to work out where the mirrors and the brakes are as well.

*I haven’t actually touched this rock myself, unfortunately: we have Callan Bentley to thank for the picture.
